Fundamental Security Part Eight – Unified Audit

15/01/2024 3 Comments

January 2024 – Oracle 19C+

If you are Oracle 12.1 onwards, and you have not explicitly disabled it, you are using Unified Audit

We previously discussed Data Encryption At Rest. Now lets talk about Unified Audit, and why you should be using it

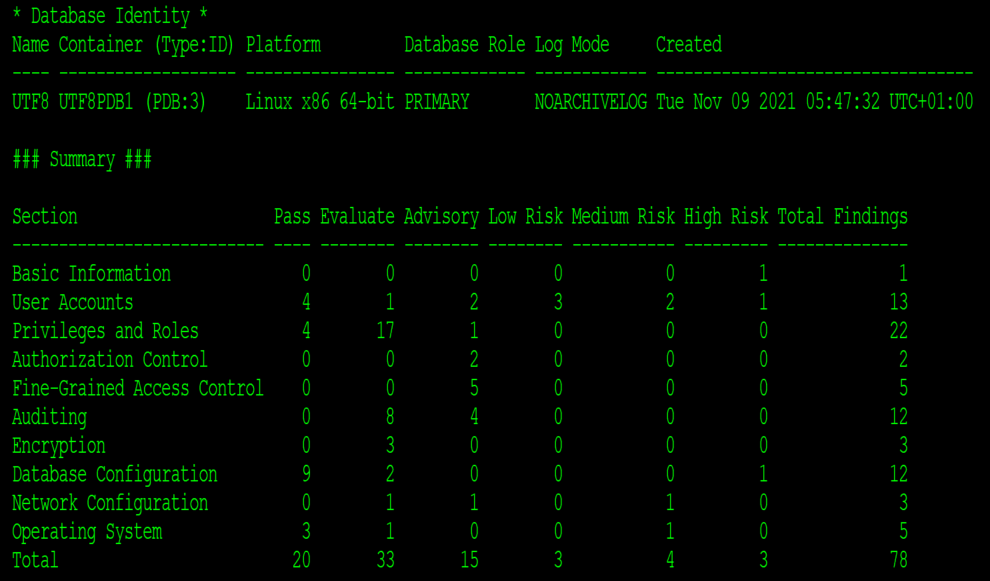

When you create an Oracle database, there is a very small amount of Unified Auditing pre-enabled, and no “traditional” auditing. But why audit? Well, do you know what’s happening in the database? Who is connecting? If anyone is accessing data that they should not? Changing Database Objects? Creating backdoors? What is lurking beneath the surface?

Traditional Oracle Auditing:

It’s deprecated in 21C and desupported in 23C. Stop using it. It’s difficult

to gather all of the data from several diverse locations

in the Database and on the O/S – and much worse if

you have multiple RAC clusters! Convert to Unified Audit.

See MOS: 2909718.1 for a converter

Unified Auditing

For some information about enabling Unified Audit in Pure mode and other bits, check this blog post

Unified Auditing uses Policies to decide what to audit. You can enable and disable these if you have appropriate AUDIT_ADMIN privileges. Typically there are 3 enabled by 23C (the exact enablement varies by release and installed options).

SQL> select * from audit_unified_enabled_policies;

POLICY_NAME ENABLED_OPT ENTITY_NAME ENTITY SUC FAI

ORA_LOGON_FAILURES BY USER ALL USERS USER NO YES

ORA_SECURECONFIG BY USER ALL USERS USER YES YES

ORA$DICTIONARY_SENS_COL_ACCESS BY USER ALL USERS USER YES YES <-23C

ORA_DV_DEFAULT_PROTECTION BY USER ALL USERS USER YES YES <- if DV

ORA_DV_SCHEMA_CHANGES BY USER ALL USERS USER YES YES <- if DV

ORA_LOGON_FAILURES – detects failed logins

ORA_SECURECONFIG – audits high-level DBA type actions, such as ALTER SYSTEM and CREATE USER

ORA$DICTIONARY_SENS_COL_ACCESS – audits access to columns containing password hashes and sensitive data

ORA_DV_DEFAULT_PROTECTION – Oracle Data Vault Only

ORA_DV_SCHEMA_CHANGES – Oracle Data Vault Only

There are a further 10 built-in policies (in 23.3)

SELECT DISTINCT policy_name FROM audit_unified_policies;

POLICY_NAME

ORA$DICTIONARY_SENS_COL_ACCESS

ORA_ACCOUNT_MGMT

ORA_ALL_TOPLEVEL_ACTIONS

ORA_CIS_RECOMMENDATIONS

ORA_DATABASE_PARAMETER

etc...Their precise function can be determined by querying view AUDIT_UNIFIED_POLICIES. The two most notable are ORA_CIS_RECOMMENDATIONS and ORA_STIG_RECOMMENDATIONS. Enabling these two policies, and creating a policy to audit database LOGINS and LOGOUTS (in 23.3, enable ORA_LOGON_LOGOFF) and you will be reasonably covered for basic audit as recommended by the Centre for Internet Security (CIS) and Security Technical Implementation Guide (STIG) – the basic compliance for the US Department of Defense (sic)

SELECT policy_name,audit_option,nvl(object_name,'N/A') object_name,audit_option_type

FROM audit_unified_policies

WHERE policy_name = 'ORA_CIS_RECOMMENDATIONS'

ORDER BY 1,2;

POLICY_NAME AUDIT_OPTION OBJECT_NAM AUDIT_OPTION_TYPE

ORA_CIS_RECOMMENDATIONS ALTER DATABASE LINK NONE STANDARD ACTION

ORA_CIS_RECOMMENDATIONS ALTER FUNCTION NONE STANDARD ACTION

ORA_CIS_RECOMMENDATIONS ALTER PACKAGE NONE STANDARD ACTION

ORA_CIS_RECOMMENDATIONS ALTER PACKAGE BODY NONE STANDARD ACTION

ORA_CIS_RECOMMENDATIONS ALTER PROCEDURE NONE STANDARD ACTION

ORA_CIS_RECOMMENDATIONS ALTER PROFILE NONE STANDARD ACTION

ORA_CIS_RECOMMENDATIONS ALTER ROLE NONE STANDARD ACTION

ORA_CIS_RECOMMENDATIONS ALTER SYNONYM NONE STANDARD ACTION

ORA_CIS_RECOMMENDATIONS ALTER SYSTEM NONE SYSTEM PRIVILEGE

ORA_CIS_RECOMMENDATIONS ALTER SYSTEM NONE STANDARD ACTION

ORA_CIS_RECOMMENDATIONS ALTER TRIGGER NONE STANDARD ACTION

ORA_CIS_RECOMMENDATIONS ALTER USER NONE STANDARD ACTION

ORA_CIS_RECOMMENDATIONS CREATE DATABASE LINK NONE STANDARD ACTION

ORA_CIS_RECOMMENDATIONS CREATE FUNCTION NONE STANDARD ACTION

ORA_CIS_RECOMMENDATIONS CREATE PACKAGE NONE STANDARD ACTION

ORA_CIS_RECOMMENDATIONS CREATE PACKAGE BODY NONE STANDARD ACTION

ORA_CIS_RECOMMENDATIONS CREATE PROCEDURE NONE STANDARD ACTION

ORA_CIS_RECOMMENDATIONS CREATE PROFILE NONE STANDARD ACTION

ORA_CIS_RECOMMENDATIONS CREATE ROLE NONE STANDARD ACTION

ORA_CIS_RECOMMENDATIONS CREATE SYNONYM NONE STANDARD ACTION

ORA_CIS_RECOMMENDATIONS CREATE TRIGGER NONE STANDARD ACTION

ORA_CIS_RECOMMENDATIONS CREATE USER NONE STANDARD ACTION

ORA_CIS_RECOMMENDATIONS DROP DATABASE LINK NONE STANDARD ACTION

ORA_CIS_RECOMMENDATIONS DROP FUNCTION NONE STANDARD ACTION

ORA_CIS_RECOMMENDATIONS DROP PACKAGE NONE STANDARD ACTION

ORA_CIS_RECOMMENDATIONS DROP PACKAGE BODY NONE STANDARD ACTION

ORA_CIS_RECOMMENDATIONS DROP PROCEDURE NONE STANDARD ACTION

ORA_CIS_RECOMMENDATIONS DROP PROFILE NONE STANDARD ACTION

ORA_CIS_RECOMMENDATIONS DROP ROLE NONE STANDARD ACTION

ORA_CIS_RECOMMENDATIONS DROP SYNONYM NONE STANDARD ACTION

ORA_CIS_RECOMMENDATIONS DROP TRIGGER NONE STANDARD ACTION

ORA_CIS_RECOMMENDATIONS DROP USER NONE STANDARD ACTION

ORA_CIS_RECOMMENDATIONS GRANT NONE STANDARD ACTION

ORA_CIS_RECOMMENDATIONS REVOKE NONE STANDARD ACTION

ORA_CIS_RECOMMENDATIONS SELECT ANY DICTIONARY NONE SYSTEM PRIVILEGE

To create your own policy is straightforward. Here’s two I recommend creating:

neil_logins: this will capture any connection to the database. As well as identifying all connections (you really want to know who is in the database!), it can also be very helpful when troubleshooting connectivity issues.

neil_any_tab_privs: is anyone using the ANY privilege to access your data? Surely they should be accessing data via explicitly granted privilege. Unless this is a DBA doing some ad-hoc performance tuning or data validation/reconciliation (ideally with an assigned ticket!), you shouldn’t see any records for this policy.

CREATE AUDIT POLICY neil_logons

ACTIONS

LOGON, LOGOFF

CONTAINER=CURRENT;

CREATE AUDIT POLICY neil_any_tab_privs

PRIVILEGES

select any table, read any table, insert any table, delete any table, update any table

CONTAINER=CURRENT;

-- and enable them

audit policy neil_logons;

audit policy neil_any_tab_privs;

Housekeeping

Now you’re gathering audit. Depending upon the nature of the activity in your database, you could have a LOT of data. You really need to ensure you are managing it correctly – starting with housekeeping. First of all, it’s worth putting your audit data into its own tablespace. You also need a steer how much of it to keep within the database for easy access, and ensure it’s easy to tidy up. You also need to consider the overspill area – if you cannot write to the DB (e.g. tablespace full, database in startup, read-only standby), you cannot write the audit. In this case the audit gets written to O/S files in “$ORACLE_BASE/audit/[instance-name]/[PDB-GUID]”, which need loading into the DB to tidy them up too:

Set the partition frequency to daily - each day gets a new partition. This doesn't speed up deletes, but it does make the cleanup tidier. The partition column is EVENT_TIMESTAMP (i.e. don't use EVENT_TIMESTAMP_UTC for your queries):

begin

DBMS_AUDIT_MGMT.ALTER_PARTITION_INTERVAL

(INTERVAL_NUMBER => 1,

INTERVAL_FREQUENCY => 'DAY');

end;

/Move the creation of new partitions to a dedicated tablespace:

begin

DBMS_AUDIT_MGMT.SET_AUDIT_TRAIL_LOCATION

(AUDIT_TRAIL_TYPE => DBMS_AUDIT_MGMT.AUDIT_TRAIL_UNIFIED,

AUDIT_TRAIL_LOCATION => 'audit_tablespace');

end;

/Create a DBMS_SCHEDULER job to tidy up:

BEGIN

dbms_scheduler.create_job(q'[AUDIT_HOUSEKEEPING]',

job_type=>'PLSQL_BLOCK', job_action=>

q'[

BEGIN

-- you need to set retention before calling cleanup

dbms_audit_mgmt.set_last_archive_timestamp

(audit_trail_type => dbms_audit_mgmt.audit_trail_unified

,last_archive_time => trunc(systimestamp - INTERVAL '3' MONTH));

-- and cleanup

dbms_audit_mgmt.clean_audit_trail

(audit_trail_type => dbms_audit_mgmt.audit_trail_unified

,use_last_arch_timestamp => true);

-- and while we are at it, load overspill files into the table

DBMS_AUDIT_MGMT.LOAD_UNIFIED_AUDIT_FILES;

END;

]'

,number_of_arguments=>0

,start_date=>trunc(systimestamp + interval '1' day)

,repeat_interval=> q'[FREQ = DAILY; INTERVAL = 1]'

,end_date=>NULL

,job_class=>q'[SCHED$_LOG_ON_ERRORS_CLASS]'

,enabled=>FALSE

,auto_drop=>FALSE

,comments=> q'[Daily clean-up Unified Audit older than 3 months]'

);

COMMIT;

dbms_scheduler.enable(q'[AUDIT_HOUSEKEEPING]');

END;

/

We’re auditing and we are keeping things tidy. Remember to treat each PDB as a separate database for auditing purposes, and audit the CDB too!

The main thing left to do it actually monitor the audit to see if anything is happening that you don’t expect, such are anyone using SELECT ANY TABLE when they should not be, or modifying objects when there’s no approved change. How you do that is very much site specific and up to you when querying UNIFIED_AUDIT_TRAIL.

Addendum

The thing is, audit isn’t just for the auditors. It can be great for troubleshooting or just trying to work out what is going on in a system.

User can’t connect? Are they in the audit (ACTION_NAME=’LOGON’, maybe with a failed attempt)? If they are then they are getting through, past the listener, to the correct database and you have a RETURN_CODE telling you what the problem is (1005 – they didn’t enter a password, 1017 – wrong password, etc).

What to know exactly what’s going on from a 3rd party or ‘black box’ app? Audit everything (not in Prod!), run it, go have a look. You can see every SQL, every procedure call, every object instantiated or removed, just like this fella

Well that’s the end of this mini series on security – I hope you have implemented EVERYTHING on this list. And remember, this is just the starting point! This is the very minimum you need to be doing. Consider your data and what should and should not be easily available. Think about ensuring the audit is held apart from the database (e.g. through loading into Splunk) to prevent DBA’s from changing it or removing it. Consider exactly what you need to audit AND how to query it to spot anomalies and alert on them in real time. And remember to protect Development every bit as much as Production – hackers love getting into a less secure dev db to work out the DB structures and minimise the time they need to spend in Prod when they finally get there… Good Luck!